Endpoint for CentML

This guide helps you deploy a FLUX endpoint on CentML using a pre-built Docker image or by building and pushing your own.

Docker Image

- Use the pre-built image: vagias/base-api:v1.0

- Alternatively, build your own image locally and push it to Docker Hub.

Building the Image

- For macOS

- For Linux
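The original build commands for each platform are not reproduced here. As a rough sketch only, the helper below assembles a `docker build` and `docker push` command pair. It assumes the deployment target runs x86-64 hosts (not confirmed by this guide), so on ARM machines such as Apple Silicon Macs it adds `--platform linux/amd64`. The function name `docker_build_commands` and the image name `your-user/flux-api` are hypothetical placeholders.

```python
import platform
import shlex


def docker_build_commands(image, tag="v1.0", machine=None, context="."):
    """Return [build, push] docker commands as argument lists.

    `image` is a placeholder for your own Docker Hub repository name.
    """
    machine = machine or platform.machine()
    ref = f"{image}:{tag}"

    build = ["docker", "build"]
    # Assumption: the target hosts are x86-64, so ARM build machines
    # (e.g. Apple Silicon) request an amd64 image explicitly.
    if machine.lower() in ("arm64", "aarch64"):
        build += ["--platform", "linux/amd64"]
    build += ["-t", ref, context]

    push = ["docker", "push", ref]
    return [build, push]


if __name__ == "__main__":
    # Print the commands instead of running them, so they can be reviewed first.
    for cmd in docker_build_commands("your-user/flux-api"):
        print(shlex.join(cmd))
```

Printing the commands rather than executing them keeps the sketch side-effect free; paste them into a terminal once the image name and tag look right.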

Deploying on CentML

- Log in to CentML: access the CentML dashboard at https://app.centml.com.

- Navigate to General Inference: from the Home page, go to General Inference to set up the deployment.

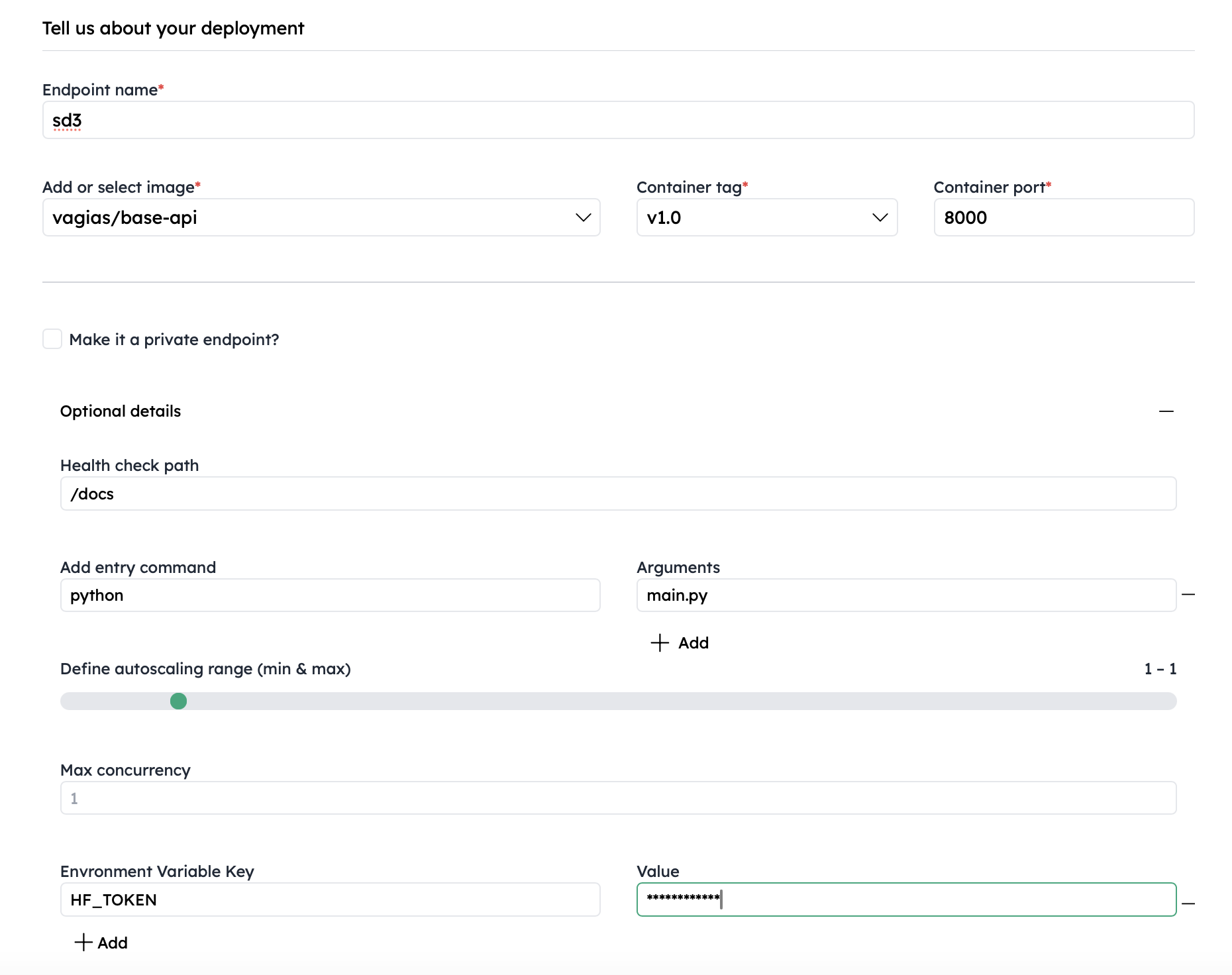

- Fill in Deployment Details: provide the necessary details, including:

  - Docker image name

  - Tag (e.g., v1.0)

  - Port number

  - Health check path

  - Entry point arguments

  - Your HF_TOKEN (Hugging Face API token) for authentication

Example:

- Select Resource Size: choose the resource size based on your image generation speed requirements:

  - Small

  - Medium

  - Large

- Click Deploy: once the deployment is created, the dashboard provides the endpoint URL.
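Before typing the deployment details into the form, it can help to collect them in one place and sanity-check them. The sketch below is a local checklist only and does not call any CentML API. The image name and tag come from this guide; the port `8000`, the health check path `/health`, and the class name `DeploymentDetails` are hypothetical placeholders, so replace them with the values your image actually uses.

```python
import os
from dataclasses import dataclass, field


@dataclass
class DeploymentDetails:
    """Local checklist of the form fields; not a CentML API object."""
    image: str
    tag: str
    port: int
    health_check_path: str
    entrypoint_args: list = field(default_factory=list)
    hf_token: str = ""

    def problems(self):
        """Return a list of human-readable issues (empty if none found)."""
        issues = []
        if not self.image:
            issues.append("Docker image name is empty")
        if not self.tag:
            issues.append("Tag is empty")
        if not 1 <= self.port <= 65535:
            issues.append("Port must be between 1 and 65535")
        if not self.health_check_path.startswith("/"):
            issues.append("Health check path should start with '/'")
        if not self.hf_token:
            issues.append("HF_TOKEN is not set")
        return issues


if __name__ == "__main__":
    details = DeploymentDetails(
        image="vagias/base-api",        # pre-built image from this guide
        tag="v1.0",
        port=8000,                      # placeholder, not from the guide
        health_check_path="/health",    # placeholder, not from the guide
        hf_token=os.environ.get("HF_TOKEN", ""),
    )
    for issue in details.problems():
        print("Check:", issue)
```

Reading the token from the `HF_TOKEN` environment variable keeps it out of source files and shell history.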

Interacting with the Endpoint

Once deployed, you can interact with the endpoint via:

- curl commands

- The included apps and examples
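As an alternative to curl, a request can be sent from Python using only the standard library. This is a minimal sketch under stated assumptions: the route `/generate`, the JSON field `prompt`, and the function names are hypothetical, since this guide does not specify the request schema. Check the included apps and examples for the real route and payload, and substitute the endpoint URL from your deployment.

```python
import json
import urllib.request


def build_request(endpoint_url, prompt, path="/generate"):
    """Build a JSON POST request; `path` and the payload shape are assumptions."""
    url = endpoint_url.rstrip("/") + path
    body = json.dumps({"prompt": prompt}).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def send(request, timeout=120):
    """Send the request and return the raw response bytes."""
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return response.read()


if __name__ == "__main__":
    # Replace the placeholder with the endpoint URL shown for your deployment.
    req = build_request("https://YOUR-ENDPOINT-URL", "a lighthouse at dusk")
    print(req.get_method(), req.full_url)
    # Uncomment once the URL and route are correct:
    # result = send(req)
```

Building the request separately from sending it makes it easy to inspect the URL and body before any network call is made.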