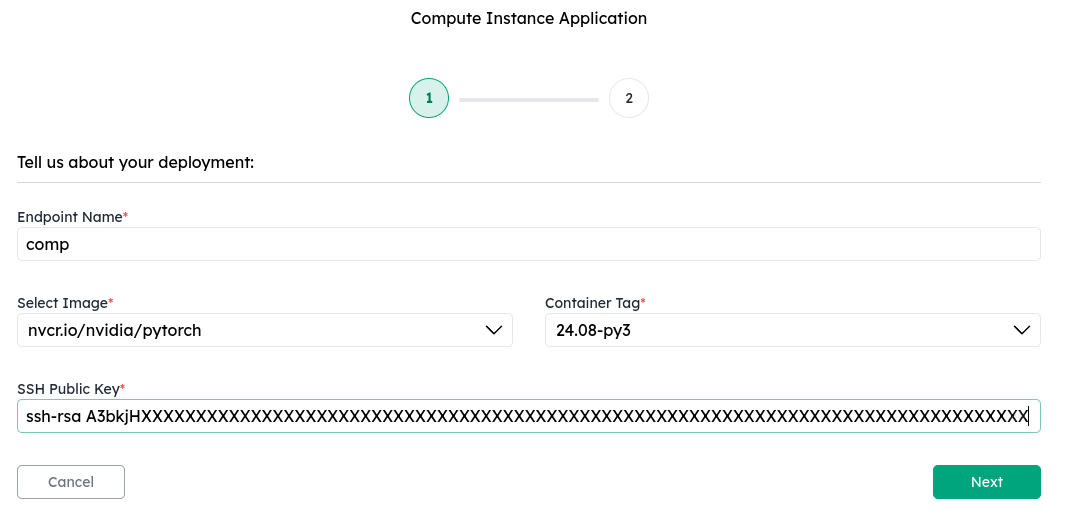

1. Select a base image

Spin up a compute instance with your preferred base image (e.g. PyTorch). Enter your SSH public key to configure access to the instance.
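If you do not yet have an SSH key pair, the sketch below shows one way to create one with the standard OpenSSH `ssh-keygen` tool and print the public half for pasting into the instance form. The key path, the comment string, and the empty passphrase are assumptions made for illustration and are not CentML requirements; consider setting a passphrase for real use.

```shell
# Assumed key location; override KEY_PATH to use a different file.
KEY_PATH="${KEY_PATH:-$HOME/.ssh/centml_ed25519}"
mkdir -p "$(dirname "$KEY_PATH")"

# Create an Ed25519 key pair only if none exists at KEY_PATH.
# -N "" sets an empty passphrase (an assumption made to keep the sketch non-interactive).
if [ ! -f "$KEY_PATH" ]; then
  ssh-keygen -q -t ed25519 -f "$KEY_PATH" -N "" -C "centml-instance"
fi

# Print the public key; this is the value to enter when creating the instance.
cat "$KEY_PATH.pub"
```

Only the contents of the `.pub` file are shared; the private key stays on your machine.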

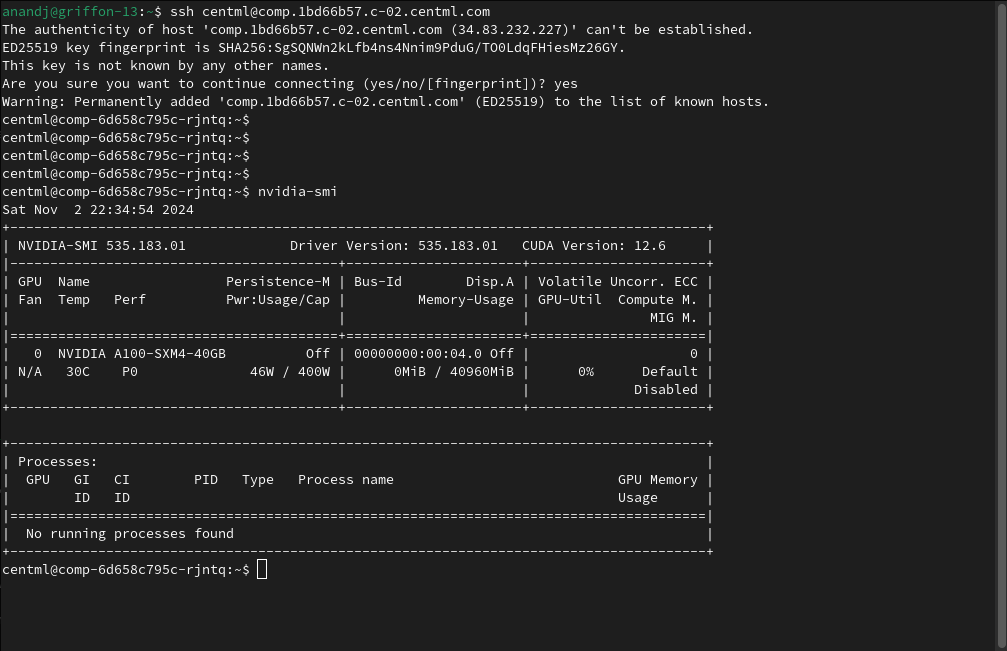

2. SSH into the instance

Once the instance is ready, SSH into the endpoint URL provided on the deployment details page with the username `centml`.
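The connection step can be sketched as a short script. `ENDPOINT` is a placeholder for the endpoint URL shown on the deployment details page, and `KEY_PATH` is an assumed location for the private key that matches the public key you entered. The sketch also assumes the endpoint is a plain hostname reachable on the default SSH port.

```shell
# Placeholder: set ENDPOINT to the endpoint URL from the deployment details page.
ENDPOINT="${ENDPOINT:-}"
# Assumed private key path; it must match the public key entered for the instance.
KEY_PATH="${KEY_PATH:-$HOME/.ssh/centml_ed25519}"

# The login user is centml, as stated in the step above.
SSH_TARGET="centml@$ENDPOINT"

if [ -z "$ENDPOINT" ]; then
  # Nothing to connect to yet; print a hint instead of calling ssh.
  echo "Set ENDPOINT to your instance's endpoint URL, then re-run." >&2
else
  ssh -i "$KEY_PATH" "$SSH_TARGET"
fi
```

For example, running the script with `ENDPOINT` set to your endpoint opens an interactive session as `centml`. If your key is already the default identity or loaded in `ssh-agent`, the `-i` option can be dropped.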

What’s Next

LLM Serving

Explore dedicated public and private endpoints for production model deployments.

Clients

Learn how to interact with the CentML platform programmatically.

Resources and Pricing

Learn more about the CentML platform’s pricing.

Private Inference Endpoints

Learn how to create private inference endpoints.

Submit a Support Request

Submit a Support Request.

Agents on CentML

Learn how agents can interact with CentML services.