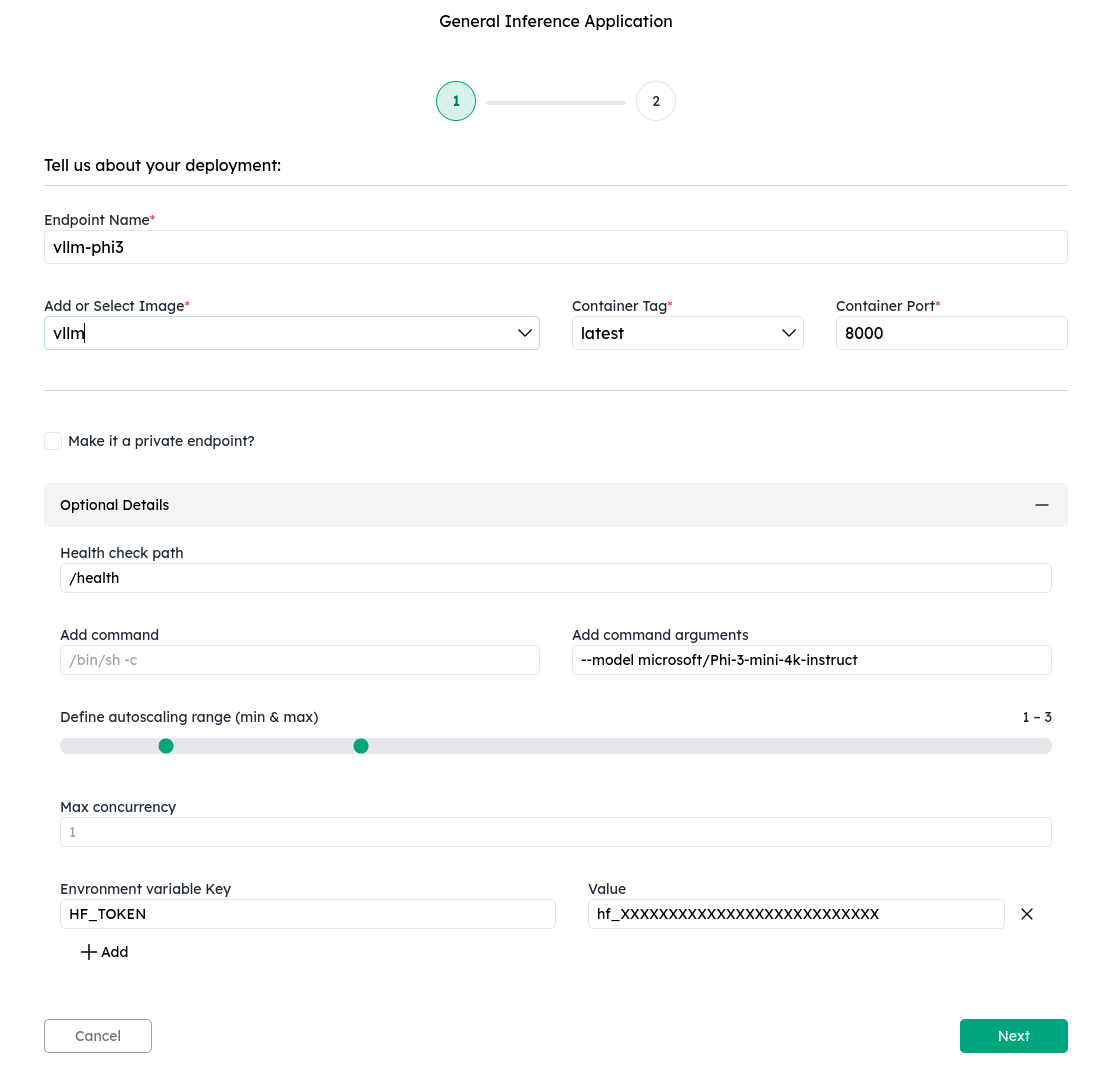

1. Configure your inference deployment

Use the Add or Select Image dropdown to choose a pre-configured recipe or enter a custom image URL manually. Similarly, use the Container Tag dropdown to pick an available tag or type one in directly. If you select a recipe, all fields except Add command are auto-filled (some may be left empty). You still need to provide the entrypoint command under Optional Details → Add command.

At a minimum, you need to specify:

- Add or Select Image: the container image URL (select from the dropdown or enter manually).
- Container Tag: the image tag (select from the dropdown or enter manually).
- Container Port: the port on which your container exposes its HTTP or gRPC service.
- Protocol: select HTTP (default) or gRPC.
- Health check path: the endpoint used to verify readiness (e.g., `/health` or `/`). For gRPC deployments, CCluster uses TCP socket checks automatically, so this field is ignored.
- Image Registry Username / Password: required only if the image is hosted in a private registry (e.g., a private Docker Hub repository). You can also use credentials stored in your Vault.
- Add command: the entrypoint command and its arguments. If left empty, the image's default entrypoint is used.
- Autoscaling: set the minimum and maximum scale for your deployment. CCluster scales replicas based on max concurrency (the maximum number of in-flight requests per replica). The default max concurrency is infinity.
- Environment variables: pass additional environment variables to the container (e.g., `HF_TOKEN`).
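Concurrency-based scaling can be illustrated with a small calculation. The sketch below is a hypothetical model, not CCluster's published algorithm: it assumes the target replica count is the number of in-flight requests divided by max concurrency, rounded up and clamped to the min and max scale you configure. The function name `desired_replicas` is illustrative only.

```python
import math


def desired_replicas(in_flight: int, max_concurrency: float, min_scale: int, max_scale: int) -> int:
    """Hypothetical sketch of concurrency-based scaling (not CCluster's actual code).

    Assumes: replicas = ceil(in_flight / max_concurrency), clamped to [min_scale, max_scale].
    """
    if min_scale < 0 or max_scale < min_scale:
        raise ValueError("require 0 <= min_scale <= max_scale")
    if max_concurrency <= 0:
        raise ValueError("max_concurrency must be positive")
    # With max_concurrency = math.inf the ratio is 0.0, so any traffic
    # still needs at least one replica to serve it.
    needed = math.ceil(in_flight / max_concurrency)
    if in_flight > 0:
        needed = max(needed, 1)
    # Never go below the configured minimum or above the configured maximum.
    return max(min_scale, min(max_scale, needed))
```

For example, 25 in-flight requests with a max concurrency of 8 need ceil(25 / 8) = 4 replicas, so `desired_replicas(25, 8, 1, 4)` returns 4, while a max scale of 3 caps the same load at 3. Under this sketch's assumptions, leaving max concurrency at its infinite default never requests more than max(min scale, 1) replicas.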

Supported inference engine recipes

CCluster provides pre-configured recipes for popular inference engines in the Add or Select Image dropdown. Selecting a recipe auto-fills all fields except the entrypoint command. Below are the Add command values you need to provide for each engine:

- vLLM: `python3 -m vllm.entrypoints.openai.api_server --model <HF_REPO_NAME>`
- SGLang: `python3 -m sglang.launch_server --model-path <HF_MODEL_REPO> --host 0.0.0.0`
- LMDeploy: `lmdeploy serve api_server <HF_REPO_NAME> --server-name 0.0.0.0`
- Ollama: leave Add command empty. See the Ollama-specific note below.
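The per-engine Add command values above are fixed templates with the Hugging Face model repository substituted in. The sketch below captures them in a lookup table. The helper `add_command` and the `DEFAULT_PORTS` table are illustrative assumptions, not part of CCluster. The ports are the upstream engines' default listening ports as we understand them. The recipe normally auto-fills Container Port, so treat them only as a cross-check.

```python
# Add command templates for the CCluster recipes listed above; {model} is the
# Hugging Face model repository (for example "org/model-name").
RECIPE_COMMANDS = {
    "vllm": "python3 -m vllm.entrypoints.openai.api_server --model {model}",
    "sglang": "python3 -m sglang.launch_server --model-path {model} --host 0.0.0.0",
    "lmdeploy": "lmdeploy serve api_server {model} --server-name 0.0.0.0",
    "ollama": "",  # leave Add command empty so the image's default entrypoint runs
}

# Assumption: upstream default listening ports for each engine. The recipe
# normally auto-fills Container Port, so use these only as a cross-check.
DEFAULT_PORTS = {"vllm": 8000, "sglang": 30000, "lmdeploy": 23333, "ollama": 11434}


def add_command(engine: str, model: str = "") -> str:
    """Return the Add command string for a recipe (hypothetical helper)."""
    template = RECIPE_COMMANDS[engine.lower()]
    if "{model}" in template and not model:
        raise ValueError(f"the {engine} recipe needs a Hugging Face model repository")
    # "".format(...) returns "", so Ollama yields an empty Add command.
    return template.format(model=model)
```

For example, `add_command("vllm", "Qwen/Qwen2.5-7B-Instruct")` returns `python3 -m vllm.entrypoints.openai.api_server --model Qwen/Qwen2.5-7B-Instruct`, which is the string you would enter in Add command.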

Ollama does not require a custom entrypoint. After the deployment is ready, you need to pull a model before it can serve requests:

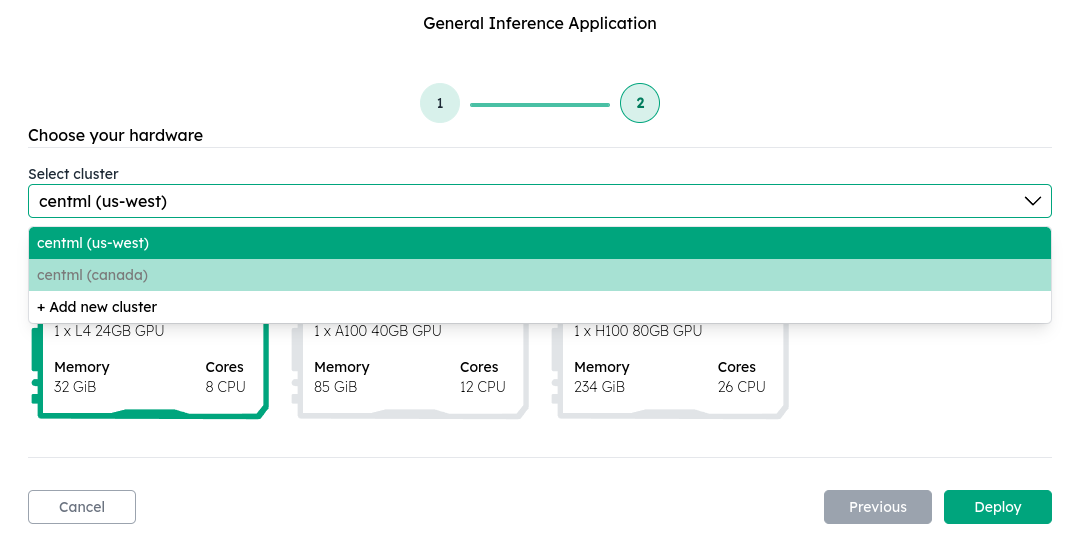
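One way to pull a model programmatically is Ollama's `POST /api/pull` REST endpoint, called through your deployment's HTTPS URL. This sketch assumes that Container Port points at Ollama's API port and that `endpoint_url` is your deployment's host. It also assumes the endpoint needs no extra authentication. The helper names and the example model name are hypothetical.

```python
import json
import urllib.request


def build_pull_request(endpoint_url: str, model: str) -> urllib.request.Request:
    """Build a POST to Ollama's /api/pull through the HTTPS ingress (Python 3.9+)."""
    host = endpoint_url.removeprefix("https://").rstrip("/")
    # "stream": False asks Ollama for a single JSON reply instead of progress lines.
    body = json.dumps({"model": model, "stream": False}).encode("utf-8")
    return urllib.request.Request(
        url=f"https://{host}/api/pull",
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def pull_model(endpoint_url: str, model: str, timeout: float = 600.0) -> dict:
    """Send the pull request and return Ollama's JSON status object."""
    # Large models can take several minutes to download, hence the long timeout.
    with urllib.request.urlopen(build_pull_request(endpoint_url, model), timeout=timeout) as resp:
        return json.loads(resp.read())
```

For example, `pull_model("<endpoint_url>", "llama3.2")` downloads the model into the running container. Once it returns, the deployment can serve requests for that model.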

2. Select the cluster and hardware to deploy

By default, NVIDIA CCluster provides several managed clusters and GPU instances on which you can deploy your inference containers.

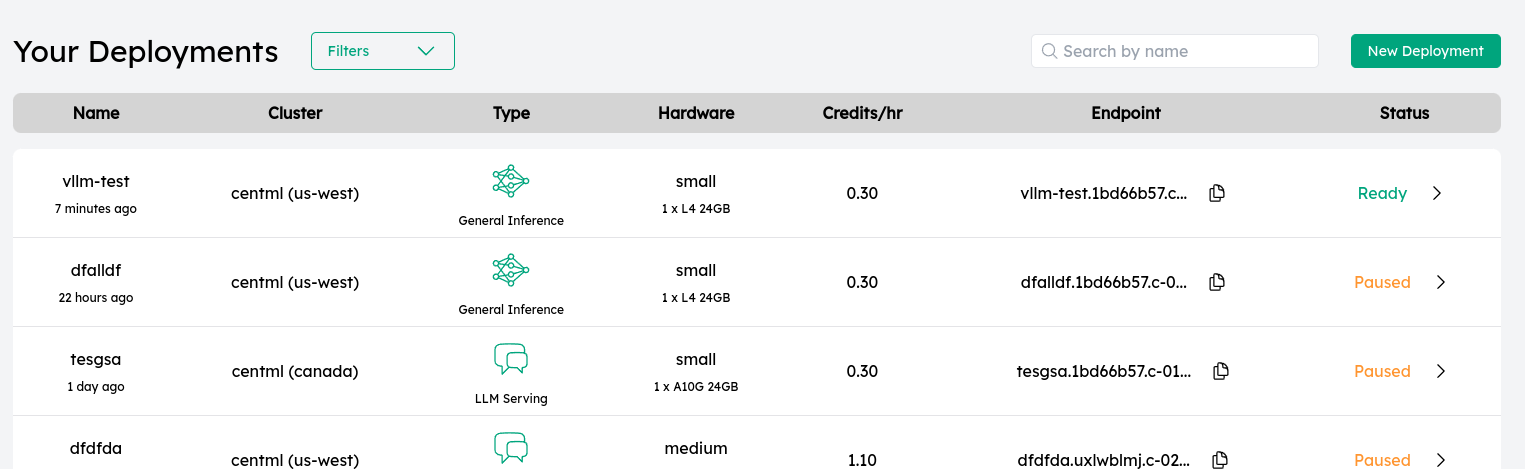

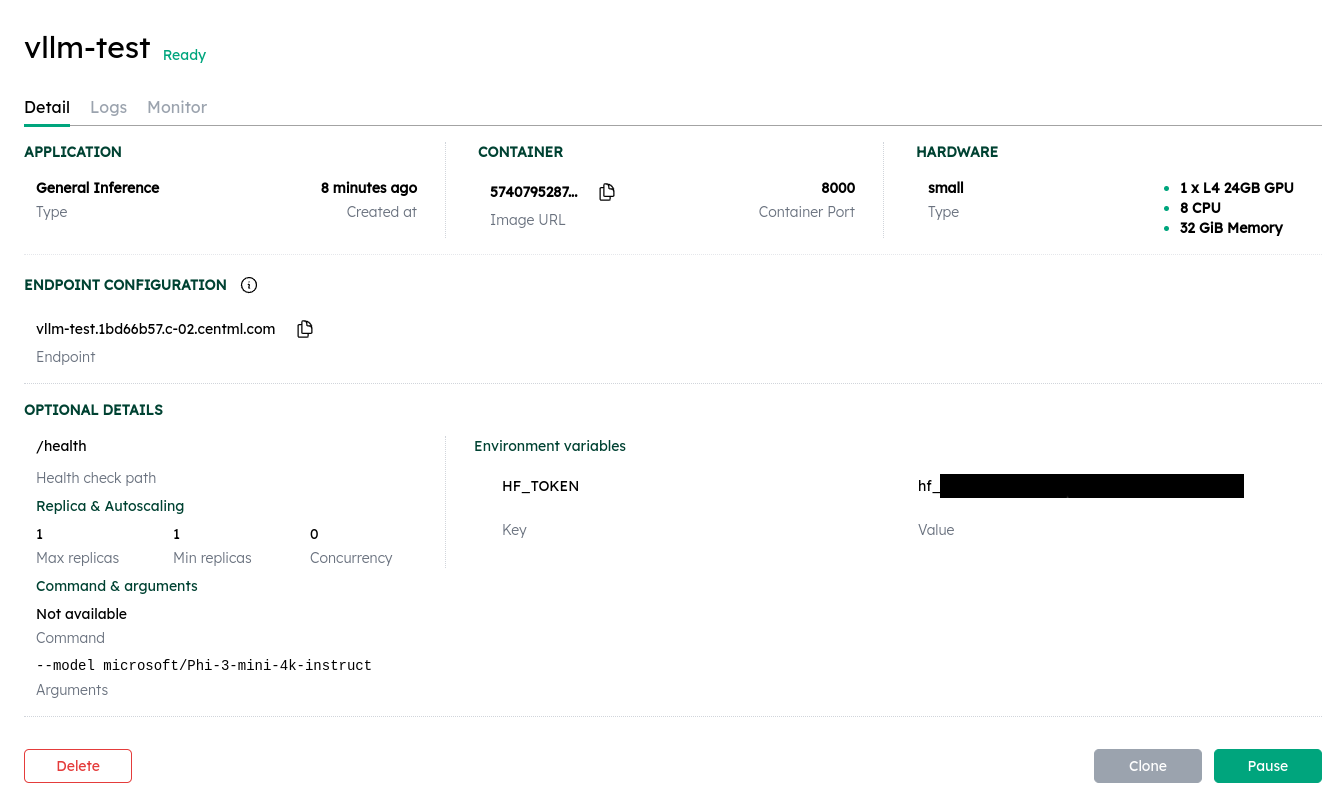

3. Monitor your deployment

Once your deployments are created, they all appear in the listing view along with their current status.
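The listing view is the authoritative place to check status. For scripted workflows, you could also poll the health check path you configured in step 1 until it answers. This is a minimal sketch under two assumptions: the deployment uses HTTP, since gRPC deployments ignore the health check path, and that path is reachable through the public endpoint. `http_probe` and `wait_until_ready` are hypothetical helpers. `probe`, `sleep` and `clock` are injectable so the loop can be tested without a network.

```python
import http.client
import time
import urllib.request
from typing import Callable


def http_probe(url: str, timeout: float = 5.0) -> bool:
    """Return True if a GET to url succeeds with a 2xx status."""
    try:
        with urllib.request.urlopen(url, timeout=timeout) as resp:
            return 200 <= resp.status < 300
    except (OSError, http.client.HTTPException):
        # urllib raises URLError/HTTPError (both OSError subclasses) for
        # connection failures, TLS errors and 4xx/5xx replies: not ready yet.
        return False


def wait_until_ready(
    url: str,
    probe: Callable[[str], bool] = http_probe,
    interval: float = 10.0,
    timeout: float = 900.0,
    sleep: Callable[[float], None] = time.sleep,
    clock: Callable[[], float] = time.monotonic,
) -> bool:
    """Poll probe(url) every `interval` seconds until it passes or `timeout` elapses."""
    deadline = clock() + timeout
    while True:
        if probe(url):
            return True
        if clock() >= deadline:
            return False
        sleep(interval)
```

For example, `wait_until_ready("https://<endpoint_url>/health")` returns `True` once the endpoint answers. It returns `False` if the endpoint is still not answering after 15 minutes.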

Accessing your endpoint

All deployments are exposed externally on port 443 with TLS enforced. CCluster automatically provisions TLS certificates for your endpoint, so no additional configuration is required.

| Protocol | Access URL | Description |
|---|---|---|
| HTTP | `https://<endpoint_url>` | Standard HTTPS access (port 443 is implicit) |
| gRPC | `<endpoint_url>:443` | gRPC with TLS enabled |

The container port you configure is used internally within the cluster. CCluster’s ingress layer handles TLS termination on port 443 and forwards requests to your container’s internal port.
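The two access URL forms in the table translate directly into client code. This sketch builds an HTTPS request to the OpenAI-compatible `/v1/chat/completions` route, which the vLLM, SGLang and LMDeploy servers expose. It also opens a TLS gRPC channel with `grpc.secure_channel` and `grpc.ssl_channel_credentials` from the `grpcio` package. The helper names are hypothetical. The sketch sends no credentials, so add whatever authentication your endpoint requires. For vLLM, the `model` field would normally be the `--model` value from your Add command.

```python
import json
import urllib.request


def _host(endpoint_url: str) -> str:
    # Accept either "host" or "https://host/" (str.removeprefix needs Python 3.9+).
    return endpoint_url.removeprefix("https://").rstrip("/")


def https_base_url(endpoint_url: str) -> str:
    # Port 443 is implicit for https://, so no port is appended.
    return "https://" + _host(endpoint_url)


def grpc_target(endpoint_url: str) -> str:
    # gRPC clients need the port spelled out; TLS terminates at the ingress on 443.
    return _host(endpoint_url) + ":443"


def chat_request(endpoint_url: str, model: str, prompt: str) -> urllib.request.Request:
    """Build (but do not send) an OpenAI-style chat completion request."""
    body = {"model": model, "messages": [{"role": "user", "content": prompt}]}
    return urllib.request.Request(
        https_base_url(endpoint_url) + "/v1/chat/completions",
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def open_grpc_channel(endpoint_url: str):
    # Imported lazily so the HTTP helpers above work without grpcio installed.
    import grpc

    return grpc.secure_channel(grpc_target(endpoint_url), grpc.ssl_channel_credentials())
```

Sending the HTTP request is then `urllib.request.urlopen(chat_request(...))`. Your generated gRPC stubs would wrap the channel returned by `open_grpc_channel`. In both cases the client talks only to port 443, and the ingress forwards traffic to your container port.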
