1. Log in to the CentML Platform

To access a CentML Serverless endpoint, you must first log in to the CentML Platform. Navigate to https://app.centml.com in your browser, then log in with your account’s credentials or, if you don’t have an account yet, create one.

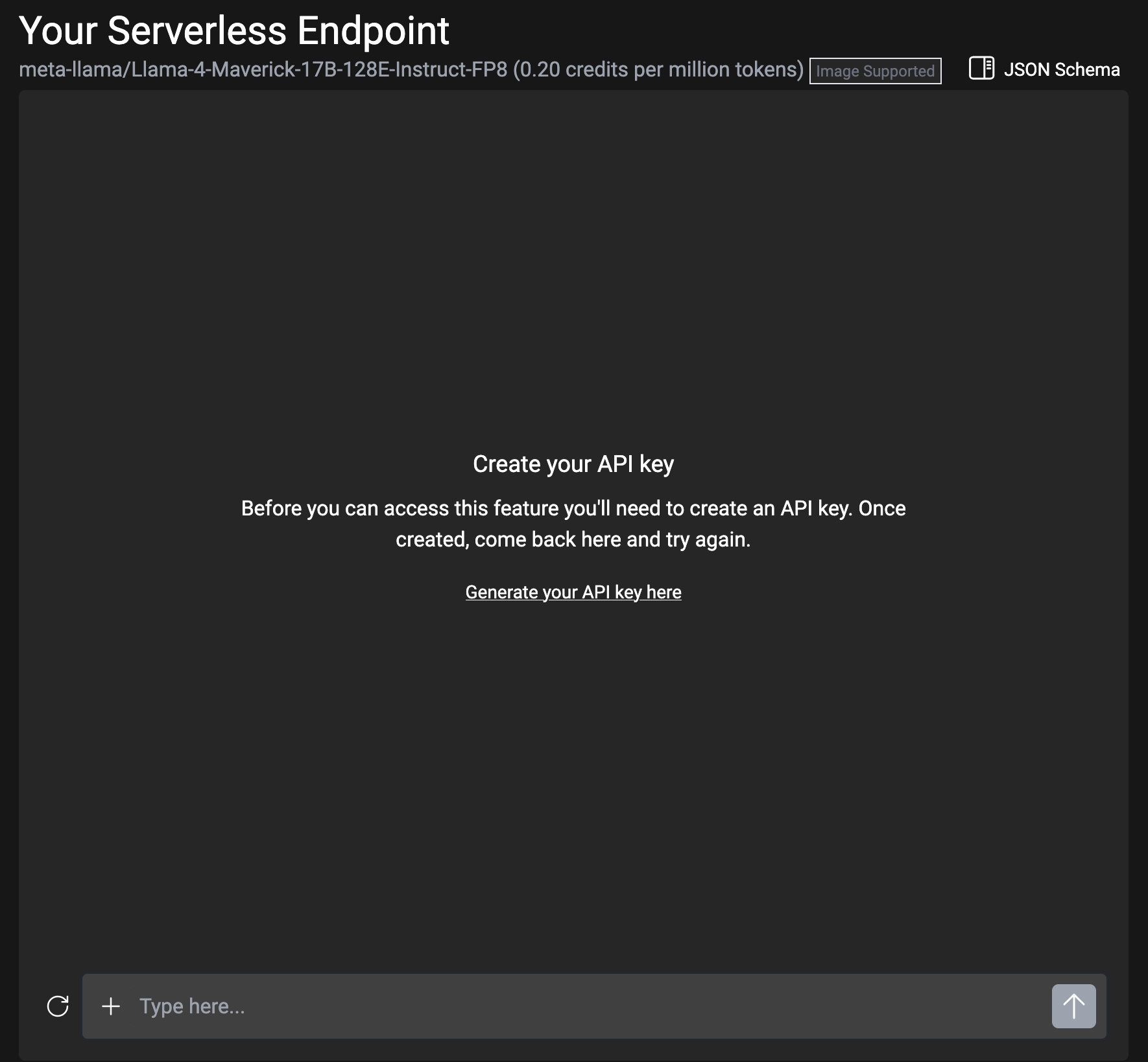

2. Create an API key

When accessing a CentML Serverless endpoint, you must authenticate with a Serverless API key, even when using the chat UI. By default, a Serverless API key is generated for you when you create your account. If no key exists, requests to the endpoint may fail with authentication errors.
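Outside the chat UI, your own code has to send this key with every request. A common pattern is to keep the key out of source code and read it from the environment. The minimal sketch below assumes the key is stored in an environment variable named `CENTML_API_KEY` (a hypothetical name chosen for this example) and that the endpoint accepts it as a bearer token, as OpenAI-compatible APIs typically do; confirm the exact scheme in the Serverless Endpoints documentation.

```python
import os


def auth_headers(env_var: str = "CENTML_API_KEY") -> dict[str, str]:
    """Build HTTP headers carrying the Serverless API key.

    Assumptions: the key is sent as a bearer token, and the environment
    variable name is hypothetical (use whatever name you prefer).
    """
    api_key = os.environ.get(env_var)
    if not api_key:
        # Failing early gives a clearer message than an authentication error from the server.
        raise RuntimeError(f"Set {env_var} to your CentML Serverless API key")
    return {
        "Authorization": f"Bearer {api_key}",  # assumed bearer-token scheme
        "Content-Type": "application/json",
    }
```

Reading the key at call time, rather than hard-coding it, means you can rotate or regenerate the key without touching your code.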

3. Begin Querying Your Desired Large Language Models

Accessing the Chat UI

To access the chat UI, select the Serverless option from the sidebar menu.

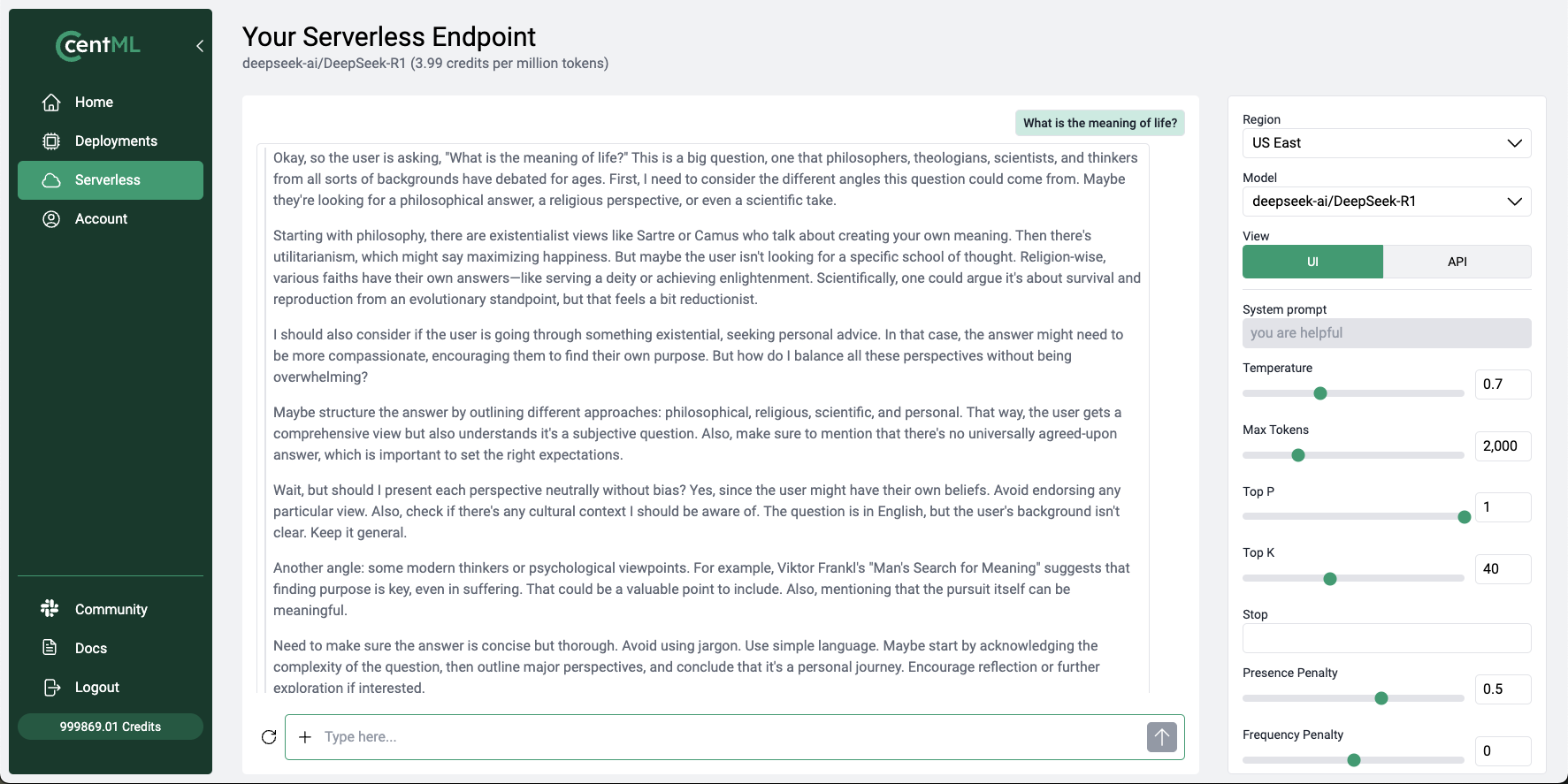

Configure, Prompt, and Submit

Once you are on the "Your Serverless Endpoint" page, follow the steps below to submit a request to the model.

- Configure the endpoint settings on the right side of the screen to fit your testing needs. This configuration menu is also where you select the model to use in the chat interface.

- Enter a prompt into the textbox at the bottom of the screen.

- Select the up-arrow button to submit the prompt to the model.
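The three UI steps above (choose a model and settings, enter a prompt, submit) map naturally onto a single chat-completion request body. This sketch assumes an OpenAI-style chat-completions schema (`model`, `messages`, `temperature`, `max_tokens`); the field names and default values are assumptions, and `your-model-id` is a placeholder, so substitute a model listed in the endpoint's configuration menu.

```python
import json


def build_chat_body(model: str, prompt: str,
                    temperature: float = 0.7,
                    max_tokens: int = 256) -> str:
    """Serialize a request body mirroring the chat UI settings.

    Assumption: the endpoint accepts an OpenAI-style chat-completions
    payload; check field names against the Serverless API docs.
    """
    payload = {
        "model": model,  # the model chosen in the configuration menu
        "messages": [{"role": "user", "content": prompt}],  # the textbox prompt
        "temperature": temperature,  # example sampling setting
        "max_tokens": max_tokens,  # cap on the number of generated tokens
    }
    return json.dumps(payload)


body = build_chat_body("your-model-id", "Explain KV caching in one sentence.")
```

Keeping the settings as keyword arguments with defaults mirrors the UI, where you only change the settings you care about.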

Advanced Serverless Usage and Considerations
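As a starting point for programmatic access, the sketch below uses only the Python standard library to prepare a chat request. It assumes the endpoint is OpenAI-compatible: the base URL, the `/chat/completions` path, the bearer-token header, and the payload fields are all assumptions to verify against the Serverless Endpoints documentation, and the key and model ID are placeholders. The request is built but not sent, so nothing leaves your machine until you pass it to `urllib.request.urlopen`.

```python
import json
import urllib.request

# Assumption: OpenAI-compatible base URL; confirm it in the Serverless Endpoints docs.
BASE_URL = "https://api.centml.com/openai/v1"


def prepare_request(api_key: str, model: str, prompt: str,
                    base_url: str = BASE_URL) -> urllib.request.Request:
    """Prepare (but do not send) a POST request to an assumed chat-completions route."""
    body = json.dumps({
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }).encode("utf-8")
    return urllib.request.Request(
        url=f"{base_url}/chat/completions",  # assumed OpenAI-style path
        data=body,
        headers={
            "Authorization": f"Bearer {api_key}",  # Serverless API key (assumed scheme)
            "Content-Type": "application/json",
        },
        method="POST",
    )


req = prepare_request("YOUR_API_KEY", "your-model-id", "Hello!")
# To actually send it (requires network access and a valid key):
# with urllib.request.urlopen(req) as resp:
#     print(json.loads(resp.read()))
```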

For more advanced Serverless topics, such as accessing the API programmatically or requesting an additional model, see our Serverless Endpoints documentation.

Additional Support: Billing, Sales, and/or Technical

For billing or sales support, reach out to sales@centml.ai.

You can also submit a support request by following our Requesting Support guide. Support requests are not limited to sales and billing; they can also cover technical support, new model requests, and more. Please don’t hesitate to reach out. We’re here to help!

What’s Next

- Agents on CentML: Learn how agents can interact with CentML services.
- Clients: Learn how to interact with the CentML platform programmatically.
- Resources and Pricing: Learn more about CentML pricing.
- Get Support: Submit a support request.
- CentML Serverless Endpoints: Dive deeper into advanced serverless configurations and patterns.
- LLM Serving: Explore dedicated public and private endpoints for production model deployments.