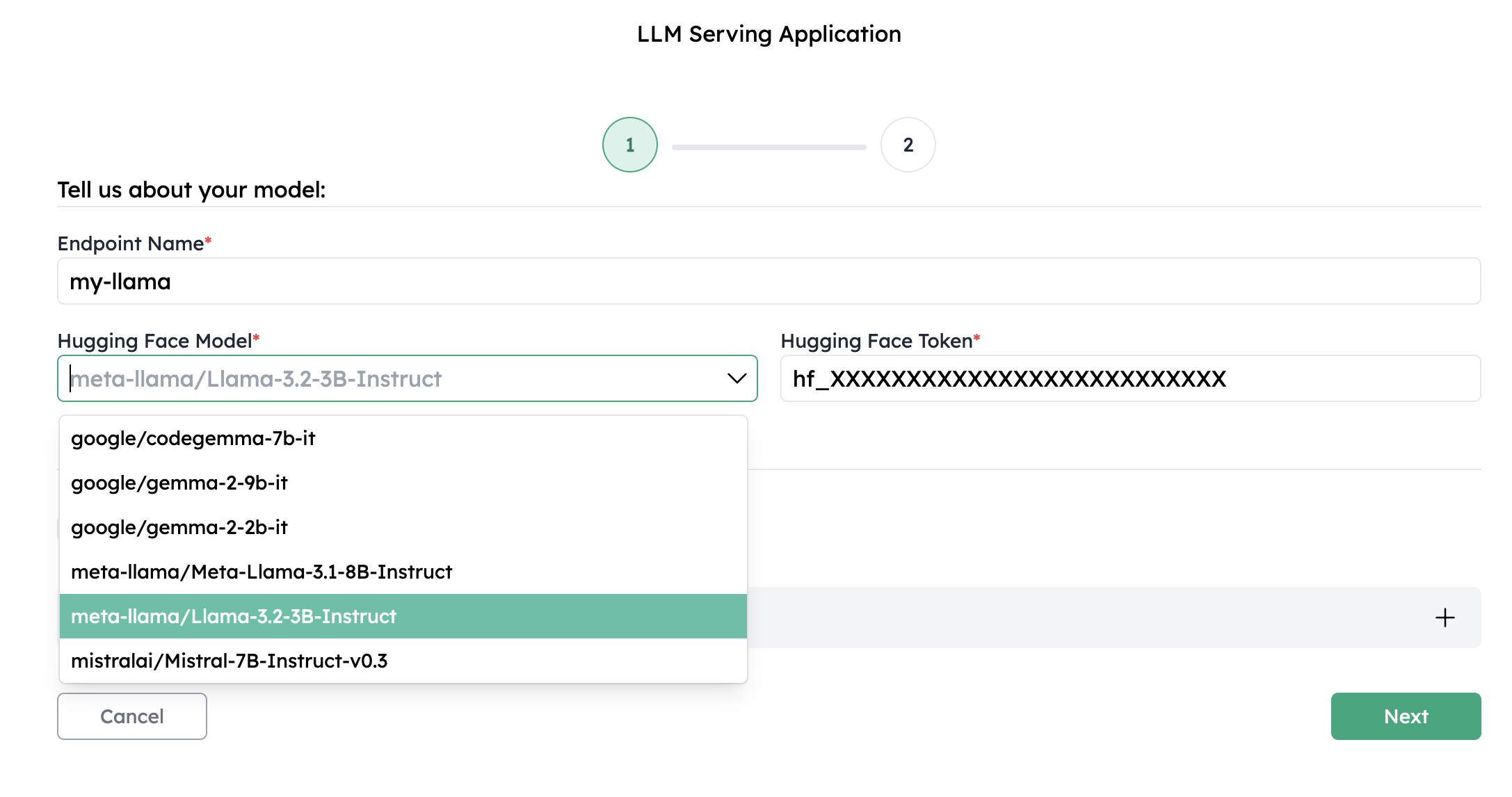

1. Choose your LLM

Select or enter the Hugging Face model name of your choice and provide your Hugging Face token. Also provide a name for the dedicated endpoint you are going to deploy.

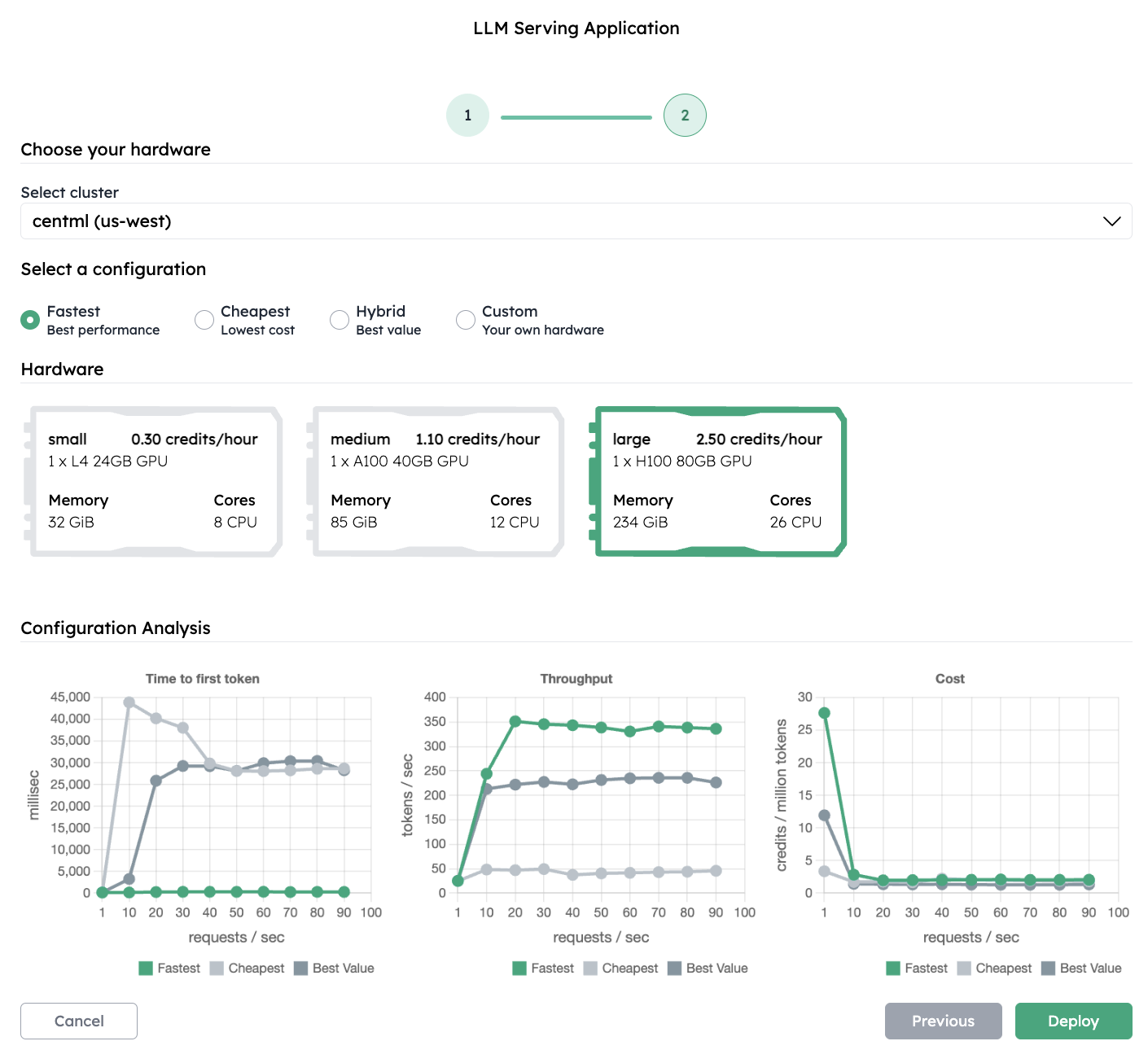

2. Plan and optimize

Choose the cluster or region where you want to deploy the model. Based on that choice, CentML presents three pre-configured deployment configurations to suit different requirements:

- Best performance: A configuration optimized for latency and throughput, suitable for high-demand applications where performance is critical.

- Lowest cost: A cost-effective configuration designed to minimize expenses, ideal for non-critical applications with lighter usage.

- Best value: A balanced configuration offering a mix of performance and cost efficiency, tailored to provide an ideal trade-off for general usage.

The configurations are compared on the following metrics:

- Time to first token: Indicates the latency between sending a request to a language model and receiving the first piece of its response.

- Throughput: Measures the number of requests the model can handle per second.

- Cost per token: Shows the cost of generating a million tokens.
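To make the time to first token and cost per token metrics concrete, the sketch below shows one way to compute them yourself. It is a minimal illustration, not CentML's own measurement method: the function names `generation_cost` and `time_to_first_token` are hypothetical, and any price or token count you pass in is your own input rather than a CentML figure.

```python
import time
from typing import Iterable, Tuple


def generation_cost(num_tokens: int, cost_per_million_tokens: float) -> float:
    """Estimate the cost of generating `num_tokens` tokens.

    `cost_per_million_tokens` is the price quoted per one million tokens;
    the caller supplies it (hypothetical input, not a real CentML price).
    """
    return num_tokens / 1_000_000 * cost_per_million_tokens


def time_to_first_token(stream: Iterable[str]) -> Tuple[float, str]:
    """Measure the delay until the first non-empty chunk of a streamed response.

    `stream` is any iterable that yields response chunks as they arrive,
    for example the chunks of a streaming HTTP response. The timer starts
    when iteration begins, so create the stream right before calling this.
    """
    start = time.perf_counter()
    for chunk in stream:
        if chunk:  # skip empty keep-alive chunks
            return time.perf_counter() - start, chunk
    raise ValueError("stream ended without producing any tokens")
```

For example, `generation_cost(250_000, 2.0)` estimates the cost of 250,000 generated tokens at an assumed price of 2.0 per million tokens.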

(Optional) Performance customization

For advanced users, CentML Platform also offers an option to customize their model performance configuration. Simply click the “Custom” configuration to gain full control over several tunable parameters.

3. Deploy and integrate

Finally, click “Deploy”. Once the deployment is ready, which takes a few minutes, copy the endpoint URL and go to https://<endpoint_url>/docs to find the list of API endpoints for your LLM deployment. We offer API compatibility with CServe, OpenAI, and Cortex, which simplifies integration with other applications.
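As an illustration of the OpenAI-compatible interface, the sketch below builds a chat completion request using only the Python standard library. The route `/openai/v1/chat/completions`, the bearer-token header, and the helper names `build_chat_request` and `send` are assumptions made for this example; check the `/docs` page of your own deployment for the actual paths and authentication requirements.

```python
import json
import urllib.request
from typing import Optional


def build_chat_request(
    endpoint_url: str,
    model: str,
    prompt: str,
    api_path: str = "/openai/v1/chat/completions",  # assumed route; verify on /docs
    api_key: Optional[str] = None,
) -> urllib.request.Request:
    """Build an OpenAI-style chat completion request for a deployed endpoint.

    `endpoint_url` is the host copied from the deployment page, without a scheme.
    """
    url = f"https://{endpoint_url.strip('/')}{api_path}"
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": 64,
    }
    headers = {"Content-Type": "application/json"}
    if api_key:
        # Assumption: the endpoint accepts a bearer token, as the OpenAI API does.
        headers["Authorization"] = f"Bearer {api_key}"
    return urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers=headers,
        method="POST",
    )


def send(request: urllib.request.Request, timeout: float = 30.0) -> dict:
    """Send the request and decode the JSON response body."""
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.load(response)


# Example usage (requires a live deployment; values are placeholders):
# request = build_chat_request("<endpoint_url>", "<model_name>", "Hello!")
# print(send(request)["choices"][0]["message"]["content"])
```

Keeping request construction separate from sending makes it easy to inspect the URL and payload before any network call is made.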

What’s Next

The Model Integration Lifecycle

Dive into how CentML can help optimize your Model Integration Lifecycle (MILC).

Clients

Learn how to interact with the CentML platform programmatically.

Resources and Pricing

Learn more about the CentML platform’s pricing.

Private Inference Endpoints

Learn how to create private inference endpoints.

Submit a Support Request

Submit a Support Request.

Agents on CentML

Learn how agents can interact with CentML services.